Hidden Worlds uses Artificial Intelligence and Augmented Reality to examine gender through the lens of computer vision. For these works, a computer trained on Greek and Roman statuary generates statues of its own, which I then interpret in my own way. I use another AI to write descriptive content for each work, and generated music completes the multimedia interactive installation.

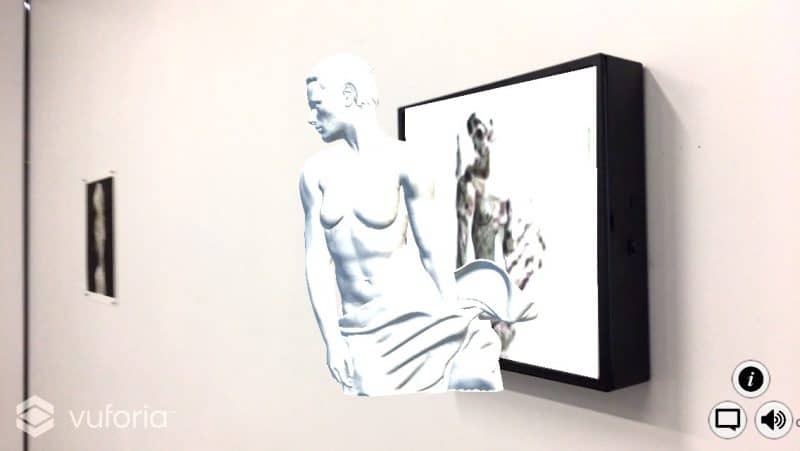

Can computers see gender? Without being trained in traditional binary notions of gender, what can AI produce? And how do we interpret the results? J. Rosenbaum presents a paper on their project Hidden Worlds, an exhibition that uses AI-generated artworks and mobile Augmented Reality to see gender through the lens of computer vision. Rosenbaum’s previous works used AI to interpret their creations; this time the computer creates the art and Rosenbaum interprets the output. A Generative Adversarial Network trained on thousands of images of Greek and Roman statuary worked for weeks to create its own. Rosenbaum then explored the output to find the truth inside the computer-generated work and reveal it to the viewer. Another neural network looked at the works and wrote poetry based on what it saw through an image classifier. This writing forms the basis of the soundscape inside the app. Viewers see light boxes and watch them come to life inside the app as the computer-generated work is transformed and reinterpreted by human eyes and hands. The language is alien and computer driven, showing a collaborative effort between human and machine. This highly experimental work invites questions about computers creating art and about how machines see humans, gender, and idealized beauty.

Hidden Worlds

Hidden Worlds was at Testing Grounds from September 12 to 22 as part of Critical Mass for Melbourne Fringe Festival.

Images

Technical information

I constructed Hidden Worlds by chaining together different machine learning projects and training them on my own datasets to get the results I was after. This is an initial experimental round in the development of a greater project around viewing gender through the lens of computer vision.

The concept driving these works involved blending the idealized figures of Greek and Roman statuary using a DCGAN. This marks my first venture into the realm of Generative Adversarial Networks, and it certainly won’t be my last. Generative Adversarial Networks learn from source material, a dataset. A GAN works by using a generator and a discriminator. The generator creates images while the discriminator critiques them, teaching the generator which images most closely resemble the dataset. As it trains, the GAN produces sample images, and I selected these works from those samples. I chose them from the latent space of the creation process because I wanted something more abstract and open to interpretation. From there, I created 3D models as an interpretation of the GAN’s output.
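The generator and discriminator loop described above can be sketched in a few lines of plain Python. This is a toy illustration only, not the DCGAN used for Hidden Worlds: the "dataset" is a stream of numbers around 4.0 rather than images, both networks are reduced to two parameters each, and every name here (`train_toy_gan`, `generator`, `discriminator`) is hypothetical.

```python
import math
import random


def sigmoid(x):
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def generator(z, g_params):
    """Map random noise z to a fake sample: g(z) = a * z + b."""
    a, b = g_params
    return a * z + b


def discriminator(x, d_params):
    """Estimate the probability that x came from the dataset."""
    w, c = d_params
    return sigmoid(w * x + c)


def train_toy_gan(steps=500, lr=0.01, seed=0):
    """Alternate discriminator and generator updates on 1D data."""
    rng = random.Random(seed)
    a, b = 1.0, 0.0  # generator parameters
    w, c = 0.0, 0.0  # discriminator parameters
    for _ in range(steps):
        real = rng.gauss(4.0, 0.5)  # stand-in "dataset" sample (assumption)
        z = rng.gauss(0.0, 1.0)     # noise fed to the generator
        fake = generator(z, (a, b))

        # Discriminator step: minimize -log D(real) - log(1 - D(fake)),
        # i.e. learn to score real samples high and fakes low.
        d_real = discriminator(real, (w, c))
        d_fake = discriminator(fake, (w, c))
        grad_w = (d_real - 1.0) * real + d_fake * fake
        grad_c = (d_real - 1.0) + d_fake
        w -= lr * grad_w
        c -= lr * grad_c

        # Generator step: minimize -log D(fake),
        # i.e. adjust the fakes so the discriminator rates them as real.
        d_fake = discriminator(fake, (w, c))
        grad_fake = (d_fake - 1.0) * w
        a -= lr * grad_fake * z
        b -= lr * grad_fake
    return (a, b), (w, c)


if __name__ == "__main__":
    g_params, d_params = train_toy_gan()
    print("generator parameters:", g_params)
    print("discriminator parameters:", d_params)
```

The discriminator's critique reaches the generator only through `grad_fake`, which is the sense in which it "teaches" the generator. Toy setups like this one can oscillate rather than settle, so the printed parameters should be read as a snapshot of training, not a converged result.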

The DCGAN I used was programmed by Taehoon Kim using the TensorFlow framework. I added some customizations to the code and supplied my own dataset.

From there I worked with Ryan Kiros’ Skip Thoughts and Neural Storyteller to create captions for the works. Using MSCOCO image recognition and captioning, Neural Storyteller creates a small story. The main success of this system was its abstract thoughts: it rendered gender as a completely fluid concept, making the writing inherently nonbinary and an ideal fit for my work.

I created the voices with Apple’s voice synthesis and composed the music tracks in LangoRhythm, a MIDI piano music writer that assigns note values to letters and durations to vowels. Each track is generated from one of the captions, forging a connection between the visual and auditory elements.
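To illustrate the idea of turning caption text into music, here is a minimal sketch of a letter-to-note mapping. It is not LangoRhythm's actual scheme, which is not documented here. It assumes that consonants are played as pitches on a C major scale starting at middle C (MIDI note 60) and that each vowel sets the duration of the notes that follow it. The names `VOWEL_BEATS`, `letter_to_pitch` and `text_to_notes` are hypothetical.

```python
# Assumed mapping: each vowel sets the length (in beats) of following notes.
VOWEL_BEATS = {"a": 0.25, "e": 0.5, "i": 1.0, "o": 2.0, "u": 4.0}

# Semitone offsets of the C major scale within one octave.
C_MAJOR = [0, 2, 4, 5, 7, 9, 11]


def letter_to_pitch(letter, base=60):
    """Map a lowercase letter a-z to a MIDI note number on the C major scale."""
    index = ord(letter) - ord("a")
    octave, degree = divmod(index, len(C_MAJOR))
    return base + 12 * octave + C_MAJOR[degree]


def text_to_notes(text, default_beats=1.0):
    """Convert text into a list of (midi_pitch, beats) pairs.

    Vowels are not played; they change the duration of later notes.
    Anything that is not an ASCII letter is ignored.
    """
    beats = default_beats
    notes = []
    for ch in text.lower():
        if ch in VOWEL_BEATS:
            beats = VOWEL_BEATS[ch]
        elif "a" <= ch <= "z":
            notes.append((letter_to_pitch(ch), beats))
    return notes


if __name__ == "__main__":
    for pitch, beats in text_to_notes("hidden worlds"):
        print(pitch, beats)
```

The resulting list of pitch and duration pairs could then be written to a MIDI file with whatever tool is at hand. The point is only that a caption deterministically fixes the melody.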

I united these elements in Unity to craft an Augmented Reality Experience.